VDR Official X

February 9, 2026

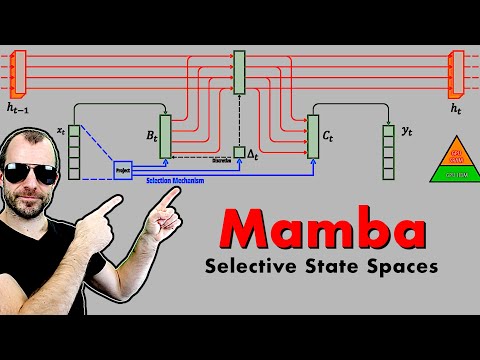

Yannic Kilcher • #mamba #s4 #ssm

OUTLINE:
0:00 - Introduction
0:45 - Transformers vs RNNs vs S4
6:10 - What are state space models?
12:30 - Selective State Space Models
17:55 - The Mamba architecture
22:20 - The SSM layer and forward propagation
31:15 - Utilizing GPU memory hierarchy
34:05 - Efficient computation via prefix sums / parallel scans
36:01 - Experimental results and comments
38:00 - A brief look at the code

Paper: https://arxiv.org/abs/2312.00752

Abstract: Foundation models, now powering most of the exciting applications in deep learning, are almost universally based on the Transformer architecture and its core attention module. Many subquadratic-time architectures such as linear attention, gated convolution and recurrent models, and structured state space models (SSMs) have been developed to address Transformers' computational inefficiency on long sequences, but they have not performed as well as attention on important modalities such as language. We identify that a key weakness of such models is their inability to perform content-based reasoning, and make several improvements. First, simply letting the SSM parameters be functions of the input addresses their weakness with discrete modalities, allowing the model to selectively propagate or forget information along the sequence length dimension depending on the current token. Second, even though this change prevents the use of efficient convolutions, we design a hardware-aware parallel algorithm in recurrent mode. We integrate these selective SSMs into a simplified end-to-end neural network architecture without attention or even MLP blocks (Mamba). Mamba enjoys fast inference (5× higher throughput than Transformers) and linear scaling in sequence length, and its performance improves on real data up to million-length sequences. As a general sequence model backbone, Mamba achieves state-of-the-art performance across several modalities such as language, audio, and genomics. On language modeling, our Mamba-3B model outperforms Transformers of the same size and matches Transformers twice its size, both in pretraining and downstream evaluation.

Authors: Albert Gu, Tri Dao

Links:
Homepage: https://ykilcher.com
Merch: https://ykilcher.com/merch
YouTube: https://www.youtube.com/c/yannickilcher
Twitter: https://twitter.com/ykilcher
Discord: https://ykilcher.com/discord
LinkedIn: https://www.linkedin.com/in/ykilcher

If you want to support me, the best thing to do is to share out the content :)

If you want to support me financially (completely optional and voluntary, but a lot of people have asked for this):
SubscribeStar: https://www.subscribestar.com/yannickilcher
Patreon: https://www.patreon.com/yannickilcher
Bitcoin (BTC): bc1q49lsw3q325tr58ygf8sudx2dqfguclvngvy2cq
Ethereum (ETH): 0x7ad3513E3B8f66799f507Aa7874b1B0eBC7F85e2
Litecoin (LTC): LQW2TRyKYetVC8WjFkhpPhtpbDM4Vw7r9m
Monero (XMR): 4ACL8AGrEo5hAir8A9CeVrW8pEauWvnp1WnSDZxW7tziCDLhZAGsgzhRQABDnFy8yuM9fWJDviJPHKRjV4FWt19CJZN9D4n

Content Summary

This report is generated from research on the following videos, based on the requirements set in Video Deep Research.

Analyze selected videos,

My goal is 📑 Discover Content Intelligence

My role is 🎙️ Consultant/Advisor

I need: 🤵 Client demands assessment

https:...2EB0

Summary

1. Breaking the Quadratic Barrier for Long Sequences

2. Hardware-Driven Speed and Training Efficiency

3. Selective Intelligence That Outperforms Larger Models

Knowledge Snap

Assessment 1: Sequence Length Optimization

15:28 - 17:32

The video discusses the tradeoffs between Transformers and state space models.

00:57 - 03:00

Recurrent neural networks process a sequence one element at a time, updating a hidden state at each step.

03:10 - 05:10

A recurrent model can in principle process sequences of any length because it keeps only a fixed-size hidden state in memory.
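
The recurrent update described in these clips can be illustrated with a minimal sketch. The scalar state, the `tanh` non-linearity and the weights `w_h` and `w_x` are illustrative assumptions, not details taken from the video; the point is only that the loop stores a single state value regardless of sequence length.

```python
import math

def rnn_step(h, x, w_h=0.5, w_x=1.0):
    """One step of a toy scalar RNN: the new state depends only on h and x."""
    return math.tanh(w_h * h + w_x * x)

def run_rnn(xs, h0=0.0):
    """Process a sequence of any length while storing a single state value."""
    h = h0
    for x in xs:
        h = rnn_step(h, x)  # the previous state is overwritten, not accumulated
    return h
```

Calling `run_rnn` on a sequence of ten or ten thousand inputs uses the same amount of state memory, which is the property the clips attribute to recurrent models.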

Assessment 2: Dynamic Content Selectivity

12:30 - 17:55

Selective state space models improve on prior work across several axes.

12:52 - 14:52

Prior models were limited by their inability to select information based on the content of the input.

01:05 - 03:10

State space models use parameterized functions to define transitions.
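
As a rough illustration of selectivity, the sketch below makes the step size and the input and output maps depend on the current input. It is a toy scalar version under stated assumptions: the names `w_delta`, `w_b`, `w_c`, the fixed negative `a`, and the simplified input term `delta * b` are all hypothetical choices for illustration, not the paper's implementation.

```python
import math

def softplus(z):
    """Smooth positive function, used here to keep the step size above zero."""
    return math.log1p(math.exp(z))

def selective_scan(xs, a=-1.0, w_delta=1.0, w_b=1.0, w_c=1.0):
    """Toy scalar selective SSM: delta, B and C are functions of the input x_t."""
    h, ys = 0.0, []
    for x in xs:
        delta = softplus(w_delta * x)  # input-dependent step size (> 0)
        b, c = w_b * x, w_c * x        # input-dependent B and C
        a_bar = math.exp(delta * a)    # decay factor in (0, 1) because a < 0
        h = a_bar * h + delta * b * x  # larger delta: forget more, take in more of x
        ys.append(c * h)
    return ys
```

Because `a_bar` changes with every token, the model can keep its state almost untouched for some inputs and largely overwrite it for others, which is what "selectively propagate or forget" refers to in the abstract.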

Assessment 3: Parallel Scan Processing

10:23 - 12:29

The recurrence can be computed for all time steps at once as a parallel scan.

10:34 - 12:35

During training the model behaves like a Transformer in that all positions of a sequence are processed in parallel.

10:34 - 12:35

The training process allows computing all forward passes of a sequence together.
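
The parallel scan idea can be sketched for a scalar recurrence `h_t = a_t * h_(t-1) + b_t`. Each step is an affine map, and composing affine maps is associative, so the prefix results can be built in logarithmically many rounds. The code below only simulates those rounds in plain Python with a Hillis-Steele style scan; it is a sketch of the algebra under that assumption, not the fused GPU kernel discussed in the video.

```python
def combine(left, right):
    """Compose two affine steps h -> a*h + b; the left step is applied first."""
    a1, b1 = left
    a2, b2 = right
    return (a1 * a2, a2 * b1 + b2)

def sequential_scan(a, b):
    """Reference implementation: one step after another, starting from h = 0."""
    h, out = 0.0, []
    for a_t, b_t in zip(a, b):
        h = a_t * h + b_t
        out.append(h)
    return out

def parallel_style_scan(a, b):
    """Inclusive scan in about log2(n) rounds; each round is parallelizable."""
    elems = list(zip(a, b))
    shift = 1
    while shift < len(elems):
        # Every position reads only values from the previous round, so on
        # parallel hardware all positions could be updated simultaneously.
        elems = [
            combine(elems[i - shift], elems[i]) if i >= shift else elems[i]
            for i in range(len(elems))
        ]
        shift *= 2
    # With an initial state of zero, the offset of each composed map is h_t.
    return [offset for _, offset in elems]
```

Both functions return the same hidden states; the second one needs far fewer dependent rounds, which is what makes training over a whole sequence feasible on parallel hardware.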

Assessment 4: Integrated Architecture Blocks

18:05 - 20:10

The architecture uses convolutions and projections without any attention blocks.

09:40 - 11:45

The architecture consists of selective state spaces and other structural layers.

37:29 - 40:40

The model includes one-dimensional convolutions and non-linearities.
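
To make the combination of projections, convolution, non-linearity and gating more concrete, here is a single-channel toy block. The scalar weights `w_in`, `w_gate`, `w_out`, the width-4 averaging kernel, and the `linear_ssm` placeholder are assumptions made for illustration; a real block works on vectors with learned matrices and a selective SSM.

```python
import math

def silu(v):
    """SiLU non-linearity: v * sigmoid(v)."""
    return v / (1.0 + math.exp(-v))

def causal_conv1d(xs, kernel):
    """Each output sees only the current and earlier inputs (zero-padded left)."""
    k = len(kernel)
    padded = [0.0] * (k - 1) + list(xs)
    return [sum(w * padded[t + j] for j, w in enumerate(kernel))
            for t in range(len(xs))]

def linear_ssm(xs, a_bar=0.9, b_bar=0.1, c=1.0):
    """Placeholder linear recurrence standing in for the selective SSM layer."""
    h, ys = 0.0, []
    for x in xs:
        h = a_bar * h + b_bar * x
        ys.append(c * h)
    return ys

def mamba_like_block(xs, w_in=1.0, w_gate=1.0, w_out=1.0,
                     kernel=(0.25, 0.25, 0.25, 0.25)):
    """Single-channel toy: project, convolve, activate, run SSM, gate, project."""
    main = [w_in * x for x in xs]          # input projection, main branch
    gate = [silu(w_gate * x) for x in xs]  # input projection + SiLU, gate branch
    main = [silu(v) for v in causal_conv1d(main, kernel)]
    main = linear_ssm(main)
    return [w_out * m * g for m, g in zip(main, gate)]  # gating, output projection
```

Nothing in the block compares one position against all others, so there is no attention matrix; information moves along the sequence only through the causal convolution and the recurrence.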

Assessment 5: Model Scaling Laws

11:24 - 17:55

The speaker notes experiments on models of around one billion parameters; the abstract additionally reports results for a Mamba-3B model.

11:30 - 17:55

Language modeling scaling laws appear promising for larger parameter counts.

11:45 - 17:55

The architecture provides efficiency for modeling extremely long sequences.

Assessment 6: Linear State Transitions

07:18 - 09:22

The transitions between hidden states are completely linear.

07:18 - 09:22

There are no non-linearities involved in the time-based hidden state computations.

08:57 - 11:03

In prior state space models, the transition applied between time steps is the same throughout the sequence.
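
Written out, a linear and time-invariant state update takes the following form. The symbols $\bar{A}$, $\bar{B}$, $C$, $h_t$, $x_t$ and $y_t$ follow common SSM notation and are assumptions here, since the clips do not name them.

$$
h_t = \bar{A}\,h_{t-1} + \bar{B}\,x_t, \qquad y_t = C\,h_t
$$

Because no non-linearity sits between consecutive states, the recurrence can be unrolled in closed form. Starting from $h_{-1} = 0$:

$$
h_t = \sum_{k=0}^{t} \bar{A}^{\,t-k}\,\bar{B}\,x_k
$$

This closed form is what allows all hidden states to be computed together rather than strictly one after another.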

Evolution of Sequence Modeling via Mamba Architecture

👋

Introduction to Linear-Time Modeling

00:00 - 06:10

The presenter introduces Mamba as a linear-time sequence model that uses selective state spaces.

🔄

Architectural Comparisons

00:57 - 03:00

Recurrent neural networks and state space models offer unique tradeoffs when compared to Transformers.

📉

Transformer Scaling Problems

01:56 - 03:58

In Transformers, memory requirements grow quadratically with sequence length, which poses a significant challenge for long sequences.
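
A small arithmetic sketch makes the scaling difference visible. It counts entries in one full attention score matrix against a fixed-size recurrent state; the state size of 16 is an arbitrary illustrative value, and real memory use also depends on heads, layers, data types and implementation details.

```python
def attention_score_entries(seq_len: int) -> int:
    """Entries in a full seq_len x seq_len attention score matrix (one head)."""
    return seq_len * seq_len

def recurrent_state_entries(state_size: int) -> int:
    """Entries held by a recurrent model, independent of sequence length."""
    return state_size

for n in (1_000, 10_000, 100_000):
    print(n, attention_score_entries(n), recurrent_state_entries(16))
```

Doubling the sequence length quadruples the first count and leaves the second unchanged.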

🚧

Recurrent Model Restrictions

03:58 - 06:03

Standard recurrent models are limited to looking only at the last state and current input.

⚡

State Space Model Efficiency

06:29 - 08:31

State space models can use a convolution to compute the outputs for an entire sequence in a single pass.
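
The equivalence between the recurrent and the convolutional view can be checked with a scalar sketch. The parameter names `a_bar`, `b_bar` and `c` are assumed notation, and the direct summation below is quadratic in sequence length; it is meant to show the identity, not an efficient implementation (efficient versions typically rely on FFT-based convolution).

```python
def ssm_recurrent(xs, a_bar, b_bar, c):
    """Step-by-step evaluation of h_t = a_bar*h_(t-1) + b_bar*x_t, y_t = c*h_t."""
    h, ys = 0.0, []
    for x in xs:
        h = a_bar * h + b_bar * x
        ys.append(c * h)
    return ys

def ssm_kernel(length, a_bar, b_bar, c):
    """Kernel K_k = c * a_bar**k * b_bar, fixed once the parameters are fixed."""
    return [c * (a_bar ** k) * b_bar for k in range(length)]

def ssm_convolution(xs, a_bar, b_bar, c):
    """Same outputs as ssm_recurrent, computed as a causal convolution."""
    kernel = ssm_kernel(len(xs), a_bar, b_bar, c)
    return [sum(kernel[k] * xs[t - k] for k in range(t + 1))
            for t in range(len(xs))]
```

The kernel depends only on the parameters, not on the input, so this shortcut works only while the parameters are the same at every time step. Making them input-dependent, as selective models do, removes that option.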

🎯

The Power of Selectivity

12:09 - 17:55

The selective state space model improves on prior work to match the modeling power of Transformers.

🏗️

Components of Mamba Architecture

18:05 - 20:10

Mamba combines convolutions and gating into an attention-free architecture to avoid quadratic bottlenecks.

💾

Hardware Memory Hierarchy

31:49 - 33:49

Fast on-chip SRAM on the GPU is where computation takes place, so the implementation keeps intermediate results there and limits transfers to the larger, slower main GPU memory.

Learning Pathway for Mamba Sequence Modeling

| Stage | Videos |
|---|---|
| 1. Analyzing Traditional Architectures | Yannic Kilcher video on Mamba (paper: https://arxiv.org/abs/2312.00752) |
| 2. Understanding State Space Basics | Yannic Kilcher video on Mamba (paper: https://arxiv.org/abs/2312.00752) |
| 3. Defining Content-Based Reasoning | Yannic Kilcher video on Mamba (paper: https://arxiv.org/abs/2312.00752) |
| 4. Implementing Data Selectivity | Yannic Kilcher video on Mamba (paper: https://arxiv.org/abs/2312.00752) |
| 5. Evaluating Inference Efficiency | Yannic Kilcher video on Mamba (paper: https://arxiv.org/abs/2312.00752) |
| 6. Optimizing Hardware Usage | Yannic Kilcher video on Mamba (paper: https://arxiv.org/abs/2312.00752) |

Detailed Findings and Insights

1. Hidden State Management

05:04 - 12:30

A hidden state is carried along the sequence and transformed at every step.

03:00 - 05:04

Each next hidden state depends on the previous state and input.

2. Backpropagation Challenges

04:28 - 12:28

Backpropagation involves moving through all the computations that generate hidden states.

04:35 - 12:30

The process of backpropagation through time is often prohibitively expensive.

3. Continuous Time Modeling

23:24 - 25:24

State space models were originally developed to handle continuous time systems.

23:24 - 25:24

Applying continuous time models to discrete data requires specific adjustments.
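
One common adjustment is to discretize the continuous system with a step size, for example with the zero-order hold rule. The sketch below applies it to a scalar system; the function name `discretize_zoh` and the symbols `a`, `b` and `delta` are assumptions chosen for illustration.

```python
import math

def discretize_zoh(a, b, delta):
    """Zero-order hold for a scalar system h'(t) = a*h(t) + b*x(t), with a != 0.

    Returns (a_bar, b_bar) such that h_t = a_bar*h_(t-1) + b_bar*x_t matches
    the continuous system when x is held constant over each step of length delta.
    """
    a_bar = math.exp(delta * a)
    b_bar = (a_bar - 1.0) / a * b
    return a_bar, b_bar
```

For a stable system (`a < 0`) the discrete factor `a_bar` lies between 0 and 1, and both systems settle to the same value under a constant input. A small `delta` gives `a_bar` close to 1, so the state changes slowly; a large `delta` lets the current input dominate.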

4. Linear Recurrence Loops

25:42 - 27:46

The model functions as a simple recurrent neural network without non-linearities.

25:54 - 27:58

In this form the model is a linear recurrent network in which the previous state is damped at each step before the new input is added.

5. Efficient Inference Precomputation

27:58 - 30:00

Constants and learnable parameters can be precomputed for efficiency.

28:30 - 30:34

Once learned, the parameters are fixed, so quantities derived from them can be precomputed before outputs are generated.

6. Layered Structural Hierarchy

20:49 - 28:49

Different architectural parts are layered on top of each other.

22:01 - 30:01

Residual connections are used to move information through the architecture.
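
The layering with residual connections can be sketched in a few lines. The helper name `residual_stack` and the representation of layers as plain functions on lists are assumptions for illustration; normalization and other details of a full architecture are left out.

```python
def residual_stack(xs, layers):
    """Apply each layer in turn and add its output back onto its input."""
    for layer in layers:
        ys = layer(xs)
        xs = [x + y for x, y in zip(xs, ys)]  # residual connection
    return xs
```

If a layer outputs zeros, the input passes through unchanged, which is how residual connections let information travel through many stacked blocks.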